PostgresoL Blog

Ok you got a database, how do you optimize SQL performances? To answer this question you need a lot of time and effort in order to understand workloads and performance patterns, evaluate degradation and apply corrective measures. However there are standard practices that you can implement to improve performances. This SQL optimization guide will showcase some best practices that apply across almost every database and can be a good starting point to optimize your database workloads.

Optimize SELECT SQL queries by understanding the query execution plan

How

All modern databases, like MySQL and PostgreSQL®, define an optimal query execution plan based on the cardinality of the various tables involved and the auxiliary data structures available like indexes or partitions. Both MySQL and PostgreSQL provide a command called EXPLAIN to show the execution plan of a statement. From the execution plan, you can understand how tables are joined, if an index is used, if a partition is pruned and many other aspects of the query execution that could alter the performance. The query plan gives hints about the cost of each operation and can flag if an index is not being used.

To get the execution plan of a query, prefix the query with EXPLAIN like the following:

EXPLAIN SELECT idFROM ordersWHEREorder_timestamp between '2024-02-01 00:00:00' and '2024-02-03 00:00:00' OR status = 'NEW';Copy to clipboard

The database returns the plan showcasing, in this example, the usage of two indexes idx_order_date and idx_order_status and a BitmapOr between the two results.

QUERY PLAN--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- Bitmap Heap Scan on orders (cost=687.35..7149.18 rows=60333 width=4) Recheck Cond: (((order_timestamp >= '2024-02-01 00:00:00'::timestamp without time zone) AND (order_timestamp <= '2024-02-03 00:00:00'::timestamp without time zone)) OR (status = 'NEW'::text)) -> BitmapOr (cost=687.35..687.35 rows=60333 width=0) -> Bitmap Index Scan on idx_order_date (cost=0.00..655.75 rows=60333 width=0) Index Cond: ((order_timestamp >= '2024-02-01 00:00:00'::timestamp without time zone) AND (order_timestamp <= '2024-02-03 00:00:00'::timestamp without time zone)) -> Bitmap Index Scan on idx_order_status (cost=0.00..1.43 rows=1 width=0) Index Cond: (status = 'NEW'::text) (7 rows)Copy to clipboard

Warning

The database optimizer will generate an execution plan based on the cardinality estimates. The closer these estimates are to the data in the table, the better the database will be able to define the optimal plan. A plan can also change over time, therefore, when performance of a query suddenly degrades, a change in the plan could be a possible cause. The plan will also depend on the table and additional supporting structures like indexes being created in the database, in the following section we'll analyze how additional structures can impact the performance.

Golden rule

Spend time understanding the query execution plan to find potential performance bottlenecks. Update the statistics, automatically or manually using the ANALYZE command, to provide the database up-to-date information about the various tables load.

Optimize SELECT SQL queries by using indexes

How

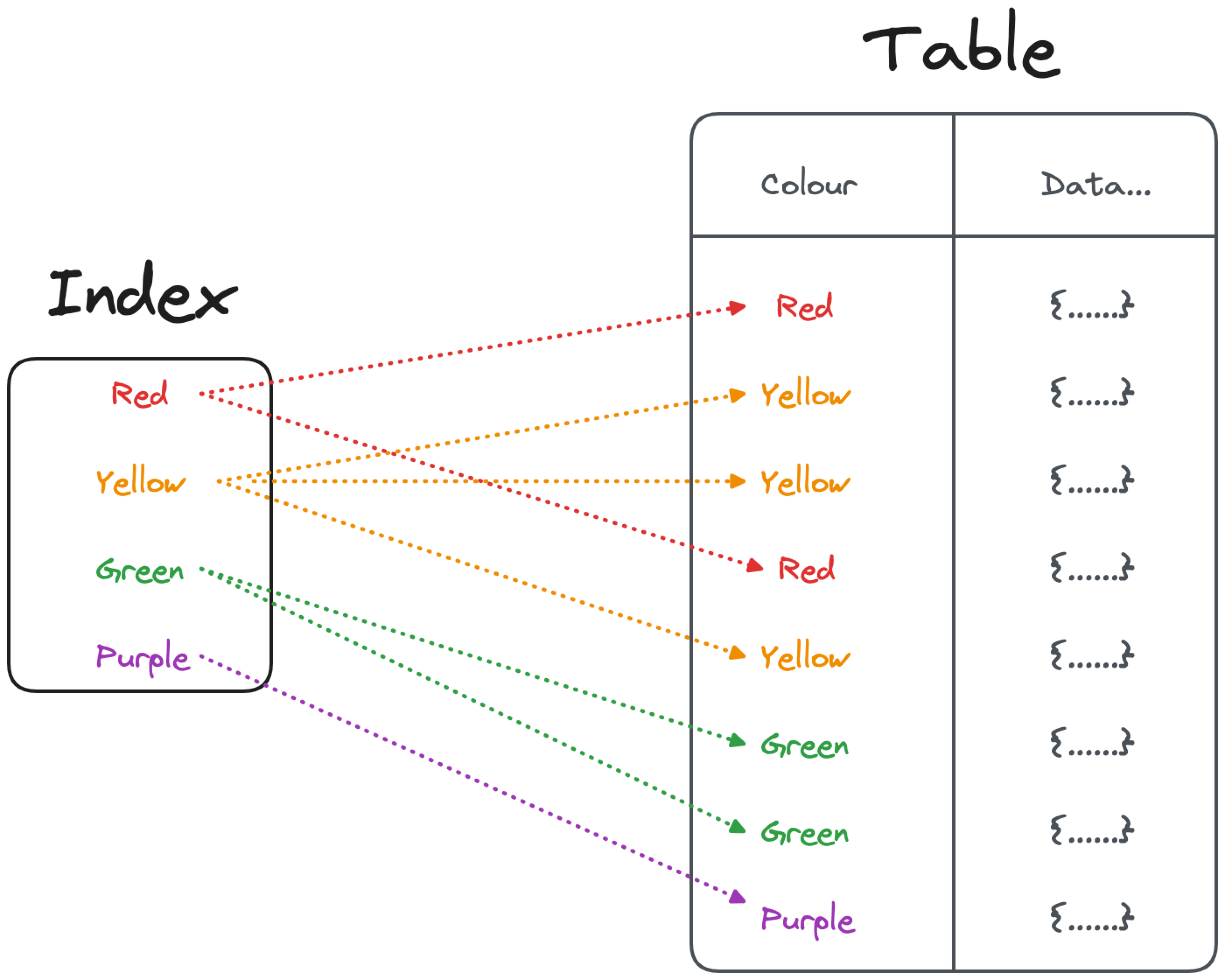

Several of the options defined above suggested the usage of indexes, but how should you use them? Indexes are a key performance booster when performing filtering, joining and ordering. Therefore it’s crucial to understand the query patterns and create proper indexes that cover the correct clauses. Both PostgreSQL and MySQL offer a variety of index types, each one having peculiar characteristics and being well suited for some use-cases.

Warning

As mentioned in the DELETE and INSERT sections, any index added on a table slows down write operations. Moreover, indexes occupy space on disk and require maintenance. Therefore think carefully about which workloads you want to optimize and what is the set of indexes that could give you the best results.

Golden rule

Unlike database tables, indexes can be dropped and recreated without losing data. Therefore it’s important to periodically evaluate the set of indexes and their status. To check indexes usage, you can rely on the database system tables, like PostgreSQL pg_stat_user_indexes or the MySQL table_io_waits_summary_by_index_usage, providing up-to-date statistics about queries impacting the indexes. Once you identify used and unused indexes, evaluate the need of restructuring them in cases of workload change.

Pro tip

Indexes can be used on single columns, multiple columns or even functions. If you’re looking to filter data using, for example, an upper(name) function, you can index the output of the function for better performance.

Aiven AI Database Optimizer can provide you index recommendations based on your database workloads.

Optimize SELECT SQL queries by improving joins

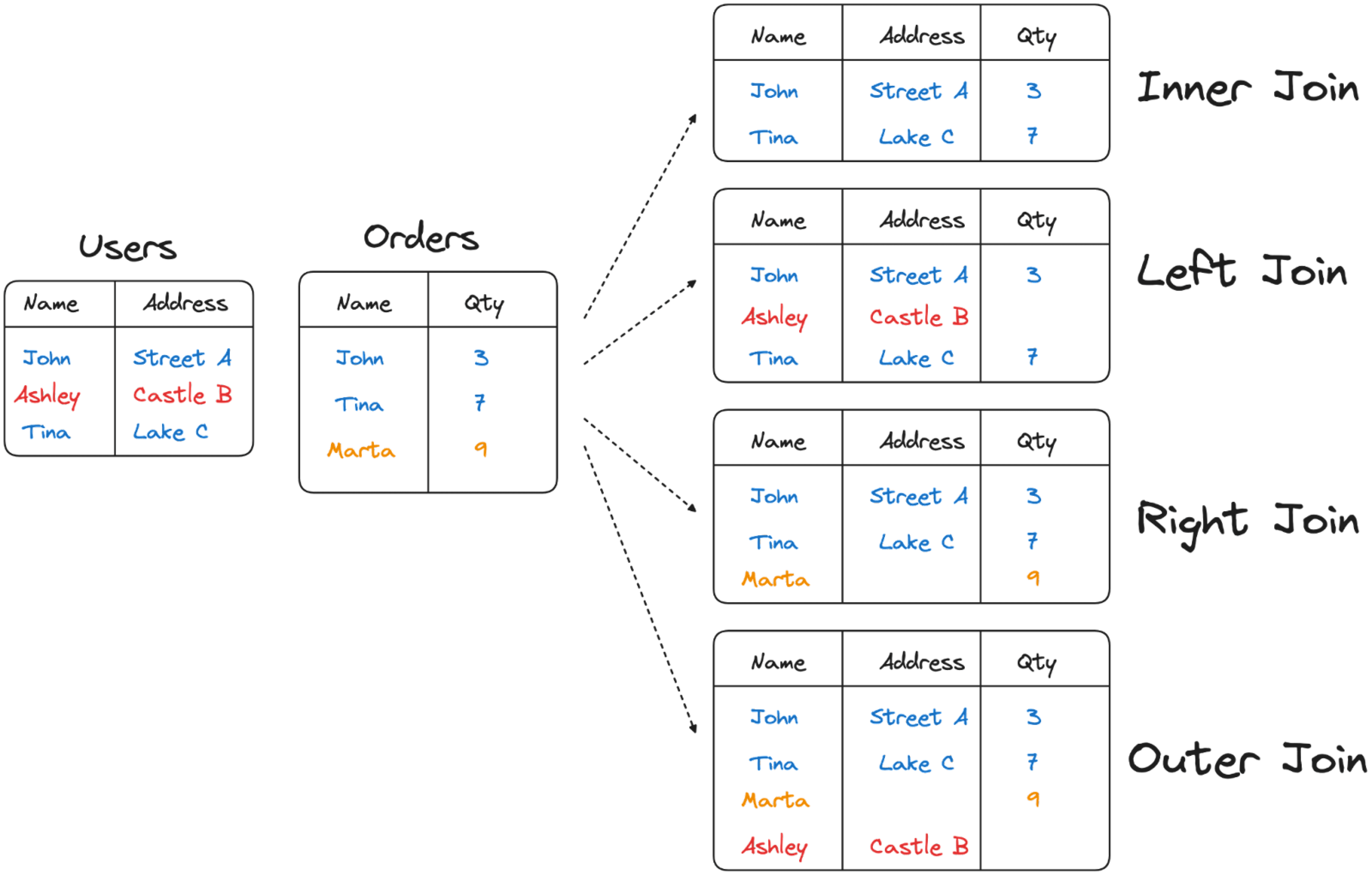

Joins are frequently used in relational databases to select data coming from disparate tables. Understanding what join types are available and what they imply is crucial to achieve optimal performances. The following set of suggestions will help you identify the correct one.

Optimize SELECT SQL queries by selecting inner joins

How

Both MySQL and PostgreSQL offer a variety of join types allowing you to precisely define the set of rows to retrieve from both sides of the join. All of them are useful for one or another reason but not all of them have the same performance. The INNER JOIN retrieving only the rows contained on both sides of the dataset usually has optimal performance. The LEFT, RIGHT, and OUTER joins on the other side, need to perform some additional work compared to the INNER JOIN therefore should be used only if really necessary.

Warning

Double check your queries, sometimes a query like the following seems legit:

SELECT * FROM ORDERS LEFT JOIN USERS ON ORDERS.NAME = USERS.NAMEWHERE USERS.NAME IS NOT NULL Copy to clipboard

The above is using a LEFT JOIN to retrieve all the rows from ORDERS, but then is filtering for rows having USERS.NAME IS NOT NULL. Therefore is equivalent to an INNER JOIN.

Golden rule

Evaluate the exact requirements for the JOIN statement, and analyze the existing WHERE condition. If not strictly necessary, prefer an INNER JOIN.

Pro Tip

Check also if you can avoid a join altogether. If, for example, we are joining the data only to verify the presence of a row in another table, a subquery using EXISTS might be way faster than a join. Check a detailed example in the How to speed up COUNT(DISTINCT) blog.

Optimize SELECT SQL queries by using the same column type for joins

How

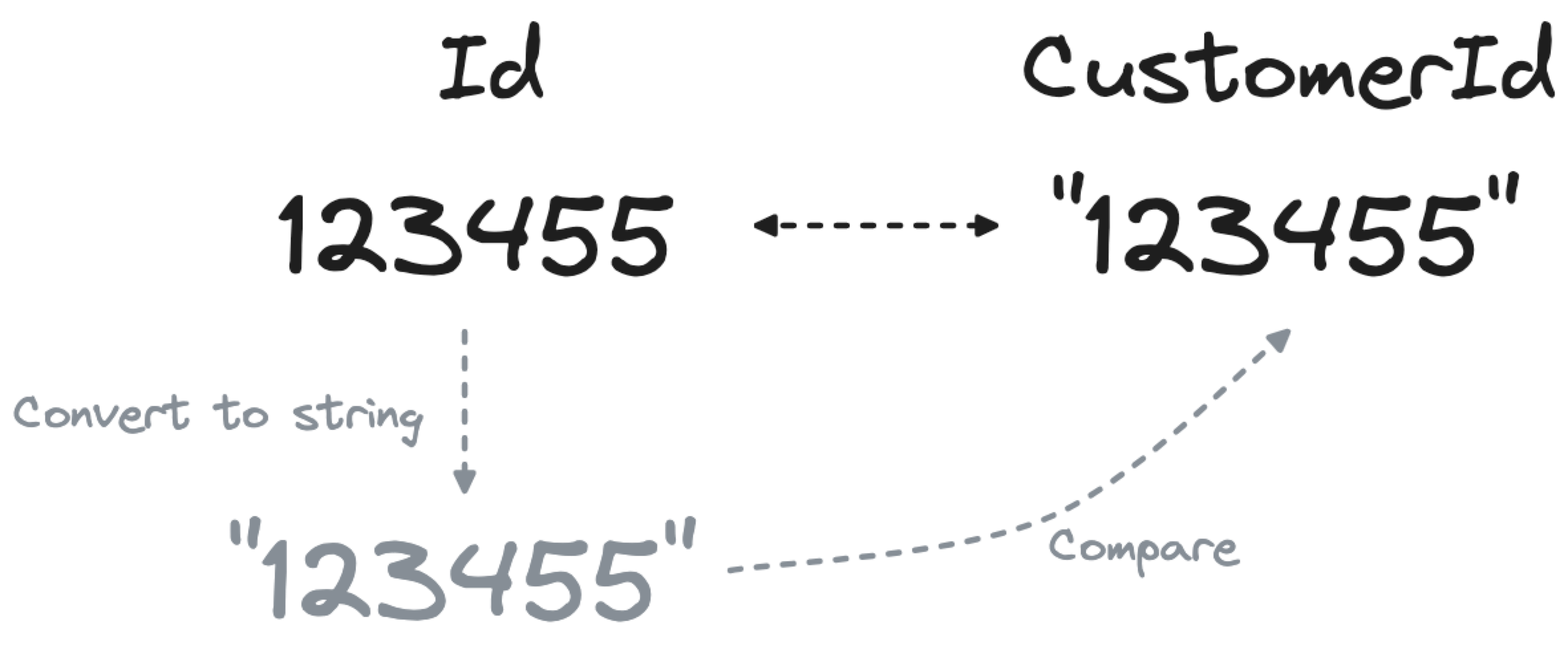

When joining two tables, ensure that the columns in the join condition are of the same type. Joining an integer Id column in one table with another customerId column defined as VARCHAR in another table will force the database to convert each Id to a string before comparing the results, slowing down the performance.

Warning

You can’t change the source field type at query time, but you can expose the data type inconsistency problem and fix it in the database table. When analyzing if the CustomerId field can be migrated from VARCHAR to INT, check that all the values in the column are integers indeed. If some of the values are not integers, you have a potential data quality problem.

Pro Tip

When in doubt, prefer more compact representations for your joining keys. If what you’re storing can be unambiguously defined as a number (e.g. a product code like 1234-678-234) prefer the number representation since it will:

Use less disk

Be faster to retrieve

Be faster to join since integer comparison is quicker than the string version

However, beware of things that look like numbers but don't quite behave like them - for instance, telephone numbers like 015555555 where the leading zero is significant.

Optimize SELECT SQL queries by avoiding functions in joins

How

Similarly to the previous section, avoid unnecessary function usage in joins. Functions can prevent the database from using performance optimizations like leveraging indexes. Just think about the following query:

SELECT * FROM users JOIN orders ON UPPER(users.user_name) = orders.user_nameCopy to clipboard

The above uses a function to transform the user_name field to upper case. However this could be a signal of poor data quality (and a missing foreign key) in the orders table that should be solved.

Warning

Queries like the one above can showcase a data quality problem solved at query time which is only a short term solution. Proper handling of data types and quality constraints should be a priority when designing data backend systems.

Golden rule

In a relational database, the joins between tables should be doable using the keys and foreign keys without any additional functions. If you find yourself needing to use a function, fix the data quality problem in the tables. In some edge cases using a function in conjunction with an index could help to speed up the comparison between complex or lengthy data types. For example, checking the equality between two long strings could potentially be accelerated by comparing initially only the first 50 characters, using the joining function UPPER(SUBSTR(users.user_name, 1, 50)) and an index on the same function.

Optimize SELECT SQL queries by avoiding joins

How

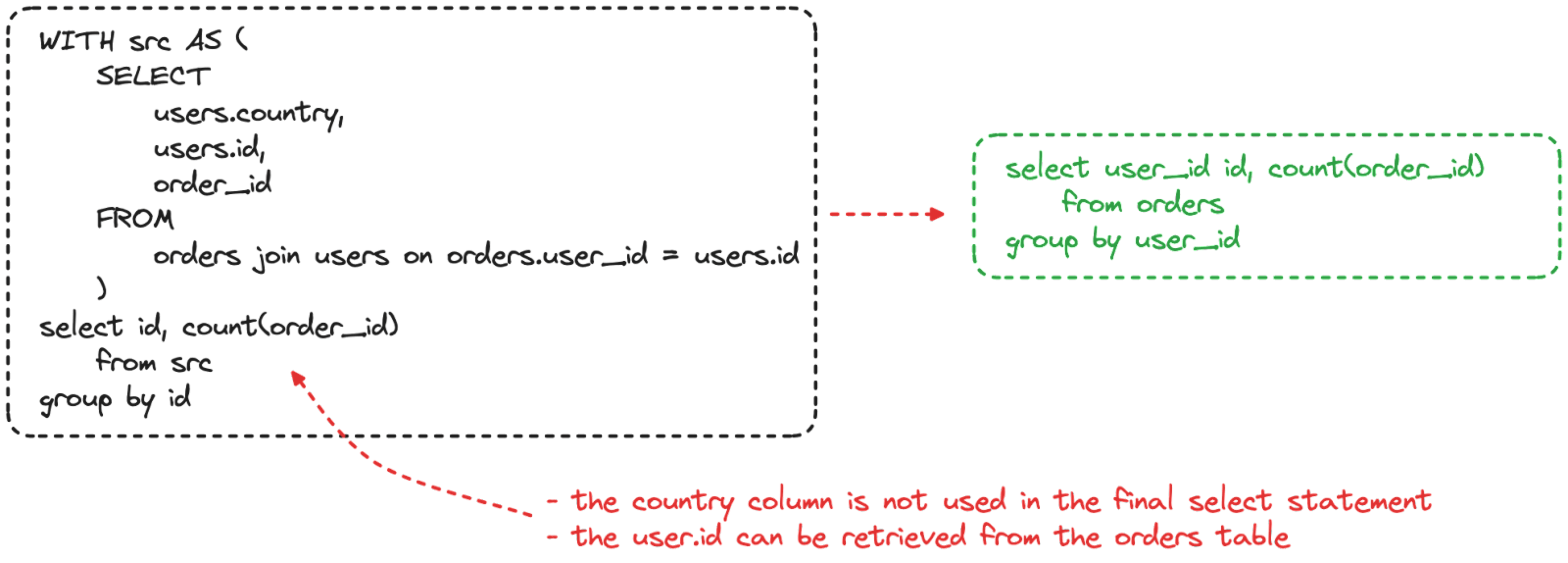

Queries can be built over time by different people and have a lot of sequential steps in the shape of CTE (common table expression). Therefore it might be difficult to understand the actual needs in terms of data inputs and outputs. Most of the time, when writing a query, you can add an extra field “just in case it is necessary” at a later stage. However this could have tremendous effects on performance if the field is coming from a new table requiring a join.

Always evaluate the strict data needs of the query and include only the columns and the tables which contain this information.

Warning

Double check if the join is needed to filter rows existing in both tables. In the example above, we could end up with incorrect results if there are user_id present in the orders table that are not stored in the id column of the users table.

Golden rule

Remove unnecessary joins. It is far more performant to generate a slimmer query to retrieve the overall dataset and then perform a lookup for more information only when necessary.

Pro Tip

The example explained above is just one case of JOIN overuse. Another example is when we are joining the data only to verify the presence of a row in another table. In such cases a subquery using EXISTS might be way faster than a join. Check a detailed example in the How to speed up COUNT(DISTINCT) blog.

Optimize SELECT SQL queries by improving filtering

After analyzing the JOIN condition it is now time to evaluate and improve the WHERE part of the query. Like the section above, subtle changes to the filtering statement can have a massive impact on query performance.

Optimize SELECT SQL queries by avoiding functions in filtering

How

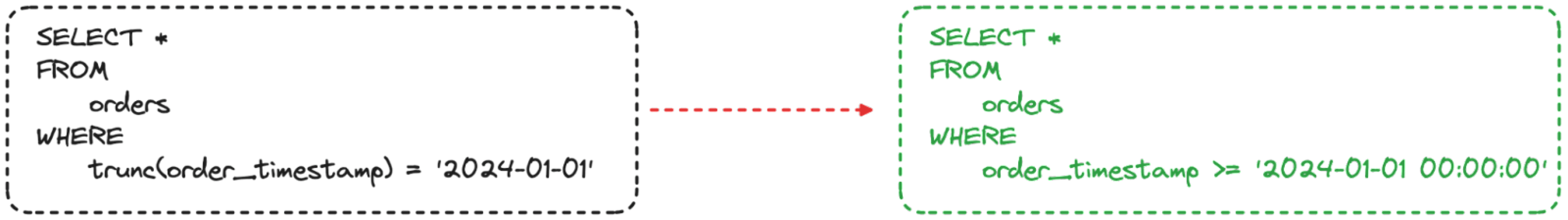

Applying a function to a column in the filtering phase slows down the performance. The database needs to apply the function to the dataset before filtering. Let’s take a simple example of filtering on a timestamp field:

SELECT count(*) FROM ordersWHERE CAST(order_timestamp AS DATE) > '2024-02-01';Copy to clipboard

The above query on a 100.000.000 row dataset runs in 01 min 53 sec because it needs to change the data type of the order_timestamp column from timestamp to date before applying the filter. But, that’s not necessary! As EverSQL by Aiven suggests, if you give it the above query and table metadata, it can be rewritten to:

SELECT count(*) FROM ordersWHERE order_timestamp > '2024-02-01 00:00:00';Copy to clipboard

The rewritten query uses the native timestamp field without casting. The result of such small change is that the query now runs in 20 sec, nearly 6 times faster than the original.

Warning

Not all functions can be avoided, since some might be needed to retrieve parts of the column value (think about substring examples) or to reshape it. Nevertheless, every time you are about to add a function in a filter, think about alternative ways to use the native data type operators.

Golden rule

When applying a filter to a column, try to reshape the filter format rather than the column format.

The above is a perfect example: moving the filter format from the date 2024-02-01to the timestamp 2024-02-01 00:00:00 allowed us to use the native timestamp data format and operators.

Pro Tip

If applying the function is a must, you can try the following two options:

Create an index on the expression, available in PostgreSQL and MySQL

Use database triggers to populate an additional column with the transformation already in place

Conclusion

Optimizing SQL statements can be a tedious task: you need to deeply understand both the database structures and the type of workload to optimize in order to achieve good results. Moreover, while optimizing a specific workload (for example an INSERT statement), you could impact the performance of other queries with different access patterns (like a SELECT or DELETE).

To have an holistic view of your data assets and supporting structures, understand performance variations and receive automatic, AI-assisted SQL optimization suggestions you can use EverSQL by Aiven. The EverSQL sensor, installed on any PostgreSQL and MySQL database, allows you to monitor slow queries and receive performance insights. The AI-driven engine analyzes your slow SQL statements, together with the existing supporting data structures, and provides both index recommendation and SQL rewrite suggestions to improve the performance.