PostgresoL Blog

Forward

The checkpoint of postgresql is a very important part of the database, it involves all aspects of database caching, persistence and data recovery. It involves a lot of disk IO during execution, so optimizing and monitoring it is indispensable. This article describes the principles of checkpoint and its monitoring, which will be very helpful for tuning.

Introduction to Checkpoint

postgresql persists data to disk, but because disk read and write performance is poor, it adds a layer of caching. This way, every read and write to the data will be prioritized in the cache, and if the data does not exist in the cache, it will only be looked up from disk. Although this greatly improves performance, but the cache does not have the persistence of the disk, in the machine power failure will be lost.

To solve this problem, postgresql introduces the wal logging mechanism, which combines the characteristics of sequential writing to disk. Each time data is modified, a log is recorded and stored in the wal file, which contains the modified data. wal data is written incrementally to take full advantage of the high efficiency of sequential writes. In this way, even if the database quits unexpectedly, you can use wal to recover the data.

When the database is restarted, you only need to replay all the previous wal logs. However, wal logs can become very large over time, which can lead to long recovery times. To address this problem, postgresql provides a checkpoint mechanism that periodically flushes the cache to disk. Recovering data only requires replaying the wal logs from the refresh point, and the previous wal logs are no longer useful and can be reclaimed.

Trigger conditions

The checkpoint trigger conditions are categorized as follows.

Time Trigger: the background checkpoint process will check the time regularly, if the interval between the last checkpoint execution and the start of the checkpoint is more than the specified value, then the checkpoint will be triggered. this specified value is the value of checkpoint_timeout in the configuration file, the range is 30s ~ 1 day, the default value is 300s.

wal log: checkpoint will be triggered when the distance between the latest wal log and the refresh point of the last checkpoint is greater than the specified value. the size of the trigger value will be mentioned in the following checkpoint_complete_target.

Manual Trigger: The checkpoint command is also triggered when the user executes the command, which must be executed by the superuser.

Database shutdown: A checkpoint is triggered when the database is shut down normally.

Base Backup: When performing a base backup of the data, the pg_start_backup command will be executed, triggering a checkpoint.

Database Crash Repair: After the database exits abnormally, for example, the database process is kill -9, it is too late to clean up the operation . When restarting, it will perform crash repair and trigger checkpoint after the repair is completed.

Monitor

For checkpoint monitoring, it is recommended to listen to the log, which will record detailed information about each checkpoint. You need to set log_checkpoints=on in your postgresql.conf configuration file so that each time a checkpoint is executed, it prints the relevant information at the beginning and at the end.

The log at the beginning of the checkpoint records the reason why the checkpoint was triggered and the rest of the flag bits. For example, the following log indicates that the checkpoint was triggered by a timeout

< 2019-11-23 13:59:02.448 CST > LOG: checkpoint starting: time

The log at the end of the checkpoint records the number of cache flushes, the usage time, and the number of changes to the wal segment file.

< 2019-11-23 13:59:39.548 CST > LOG: checkpoint complete: wrote 371 buffers (2.3%); 0 transaction log file(s) added, 0 removed, 0 recycled; write=37.082 s, sync=0.006 s, total=37.099 s; sync files=56, longest=0.003 s, average=0.000 s; distance=3436 kB, estimate=3436 kB

In addition to listening to the logs, the pg_stat_bgwriter view stores checkpoint statistics, which is a single row of data that is dynamically updated. The following shows the columns associated with checkpoint

| Column | Type | Description |

|---|---|---|

checkpoints_timed | bigint | Number of times triggered because of time |

checkpoints_req | bigint | Number of times triggered for other reasons |

checkpoint_write_time | double | Time taken to flush the cache to the filesystem cache |

checkpoint_sync_time | double | Time spent flushing the file system cache corresponding to the cache to disk |

buffers_checkpoint | bigint | Number of flushes to disk |

buffers_backend | bigint | Number of sync requests received |

buffers_backend_fsync | bigint | The number of times the backend needs to perform fsync operation itself because sending sync request failed. |

stats_reset | timestamp | Update time |

Checkpoint_complete_target configuration item

Here we need to introduce checkpoint_complete_target configuration item in detail, which is more important and complicated in checkpoint optimization. We know that checkpoint execution will take up system resources, especially disk IO, so in order to minimize the impact on the system, IO speed limit will be applied. If checkpoint_complete_target is enabled, then the checkpoint does not need to complete immediately, it will control the completion time to checkpoint_timeout_ms*checkpoint_complete_target, so that the disk IO can run smoothly. so that disk IO can run smoothly and be contained within a certain range of impact.

If the checkpoint does not complete immediately, then the old wal logs cannot be deleted immediately. Since max_wal_size specifies the maximum size of the wal log, we need to lower the value of the checkpoint trigger due to the wal being too large, because when the checkpoint is executed, new wal logs are generated. Obviously, the peak wal size is the point at which the checkpoint is about to complete, because that is the point at which the triggered wal logs are included, plus any new wal logs that have been added. Assuming the trigger value is trigger_wal_size, the maximum size of wal logs added during the checkpoint_timeout is trigger_wal_size, and assuming that wal logs grow at the same rate, the size of wal logs grown at this point is trigger_wal_size * checkpoint_completion_timeout * checkpoint_completion_timeout * checkpoint_completion_timeout * checkpoint_completion_timeout * checkpoint_completion_timeout checkpoint_completion_target. to ensure that the wal log size is equal to max_wal_size at the peak time, you can compute the trigger value

trigger_wal_size + trigger_wal_size * checkpoint_completion_target = max_wal_size; trigger_wal_size = max_wal_size / (1 + checkpoint_completion_target)

Of course to ensure that the completion time is within checkpoint_timeout_ms * checkpoint_complete_target, a speed limit is required when performing a cache flush to a file. The details of speed limiting are covered below.

Checkpoint process

postgresql creates a background process that handles checkpoint.

The checkpoint process periodically checks to see if the time trigger conditions have been met, and it also checks to see if there are any requests sent from other processes. For example, the recovery process is responsible for repairing database crashes, and after a successful recovery, it sends a checkpoint request to shared memory. the checkpoint process periodically checks for requests and handles them.

Checkpoint request

The checkpoint request is simply represented by a variable of type int, named ckpt_flags. it is used as a flag bit, where the bits correspond to different trigger causes

#define CHECKPOINT_IS_SHUTDOWN 0x0001 /* Checkpoint triggered because database shutdown is complete */ #define CHECKPOINT_END_OF_RECOVERY 0x0002 /* Checkpoint triggered because database recovery is complete */ #define CHECKPOINT_FORCE 0x0008 /* Checkpoint triggered because database recovery completed */ #define CHECKPOINT_CAUSE_XLOG 0x0080 /* Checkpoint triggered because wal log is too large */ #define CHECKPOINT_CAUSE_TIME 0x0100 /* checkpoint triggered because of timeout */ #define CHECKPOINT_IMMEDIATE 0x0004 /* Disable speed limit on cache flushing */ #define CHECKPOINT_FLUSH_ALL 0x0010 /* Flush all caches */ #define CHECKPOINT_WAIT 0x0020 /* The process waits for the checkpoint to finish */ #define CHECKPOINT_REQUESTED 0x0040 /* Indicates that it has been requested before */

Checkpoint Lock

Each time a checkpoint is executed, it needs to acquire the checkpoint lock and release it when it's done. This way, only one checkpoint operation is executed at any given time.

Refresh Dirty Pages

After acquiring the lock, the checkpoint process flushes the cached data to disk.

It iterates through all the cached pages of data, adds a checkpoint_need flag bit if the page contains dirty and pernament flag bits, and saves them to a linked table.

The caches in these chained lists need to be flushed to disk. postgresql sorts these caches according to their corresponding disk locations in order to take advantage of the speed of sequential disk writes.

The sorted caches are flushed to disk in order, and the dirty, pernament, and checkpoint_need flags are cleared.

Refresh speed limit

postgresql will try to ensure that the time to refresh the cache is kept around checkpoint_timeout_ms * checkpoint_complete_target, and the following calculates the percentage that should have been completed at that point. On each cache page refresh, the current progress is compared. If it is faster than timeout_progress, it needs to hibernate for a while. If it is slower than that, it will not hibernate.

timeout_progress = (now - checkpoint_start_time) / (checkpoint_timeout_ms * checkpoint_complete_target);

The checkpoint is also triggered if the wal size grows beyond trigger_wal_size. postgresql will look at the wal's new size in real time to make sure that the cache is flushed faster than the wal log grows. We allow the log to grow no larger than trigger_wal_size * checkpoint_complete_target when the checkpoint completes. Then the log grows at the rate of

wal_progress = (latest_wal_pointer - checkpoint_start_wal_pointer) / (trigger_wal_size * checkpoint_complete_target);

Whenever a buffer is refreshed, its progress is updated progress = buffer_processed_num / buffer_to_process_total. then the size of progress is compared to timeout_progress and wal_progress, and if all meet the progress If they are all up to speed, then they will pause for a while to slow down the refresh rate. This approach minimizes the impact of checkpointing on the system.

Disk Refresh

Notice that the previous section only flushes the postgresql cache to the filesystem; it does not guarantee that the data is persisted to disk, because the filesystem itself will have a cache.

When a buffer is flushed, postgresql sends a sync request. checkpoint executes the sync request and flushes the filesystem cache to disk.

Generate checkpoint wal logs

After the disk is flushed, postgresql generates a wal log with information about the checkpoint. It has a lot of information, including information about the current transaction ID (current maximum transaction ID, minimum transaction ID, transaction time, transaction frozen, etc.), and the following is just a description of some of these fields

typedef struct CheckPoint XLogRecPtr redo; /* The latest wal log position at the start of checkpoint */ XLogRecPtr redo; /* Latest wal log position at the start of checkpoint execution */ TimeLineID ThisTimeLineID; /* timeline id */ bool fullPageWrites; /* Whether the full page wal feature is enabled */ pg_time_t time; /* checkpoint time */ // Transaction related information ...... } checkPoint.

Note the redo member here. Every time the database is restored, the wal log is replayed from this location.

Update pg_control file

The pg_control file holds important information that is utilized by the database startup to

ControlFile->checkPoint = ProcLastRecPtr; // location of the file where the checkpoint wal log is located ControlFile->checkPointCopy = checkPoint; // checkpoint wal log's contents ControlFile->time = (pg_time_t) time(NULL); // update time ControlFile->minRecoveryPoint = InvalidXLogRecPtr; // InvalidXLogRecPtr means that the database needs to be restored to the latest wal log at startup. ControlFile->minRecoveryPointTLI = 0; // Indicates that the database needs to be restored to the latest timeline id for startup.

Deleting old wal logs

The wal logs before the checkpoint.redo location, corresponding to the data changes have been saved to disk, so this part of the wal logs can be deleted or recycled. Before deleting or recycling, you also need to check whether these wal logs have been successfully archived (if archive is enabled), and also check whether the slave repository has received these wal logs (if stream replication is enabled).

Recycling is not the same as deleting, it is just renaming the file to the name of the next wal segment.

Check archive timeout

If wal archive is enabled, the archive timeout is also checked here. Originally, wal archive will be triggered only when the wal log reaches a certain size, which is 16MB by default. However, if the data modification is slow, it will take a long time for the wal log size to reach 16MB. The wal archive is usually used for incremental backups or running from a repository, so if you don't get updates for a long time, it will make the backup interval large. To solve this problem, postgresql provides an archive_timeout setting that guarantees a timeout for triggering an archive.

Checkpoint

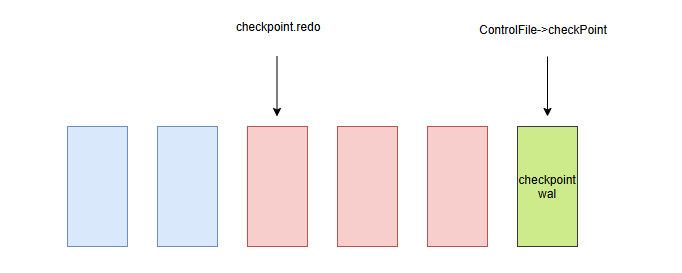

At the beginning of each checkpoint, the current location of the most recent wal log is recorded, called the checkpoint (redo). When the database is recovered, the wal log is played back from the checkpoint.

The blue squares on the left of the image above represent the wal data at the beginning of the checkpoint. Since checkpoint does not affect user requests, the red squares afterward represent subsequent requests.

When postgresql modifies the cache, it records the corresponding wal logs, so once the checkpoint is complete, the changes in the blue wal logs are guaranteed to be persisted to disk.

When the checkpoint completes, the location of the checkpoint is recorded and saved in the checkpoint wal log. To make it easier to find the checkpoint's data when the database is restored, its location is stored in the pg_control file.

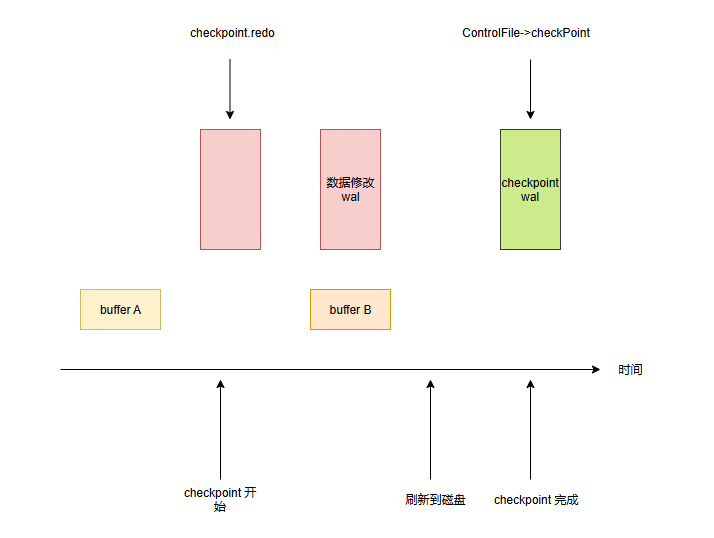

There is an additional concept involved in data recovery. Suppose, in the following scenario, when the data corresponding to a buffer is flushed to disk after the checkpoint has started, the user executes an insert request that modifies the data in the buffer.

Suppose that the database crashes, and when it recovers, it starts at a checkpoint. It first reads data b from disk and then encounters the wal log corresponding to the insert statement. It needs to know that the modification corresponding to the wal log has been successfully persisted, otherwise a recovery error will occur

Here we need to introduce the header of the buffer, which has a special attribute, pd_lsn, indicating the location of the last modified wal log. When it performs recovery, it will first check the position of pd_lsn and the wal log, if it finds that pd_lsn is large, then it will ignore the wal log. This way, no recovery error will occur.